Developing an artificial intelligence-based clinical decision-support system for chest tube management: user evaluations & patient perspectives of the Chest Tube Learning Synthesis and Evaluation Assistant (CheLSEA) system

Highlight box

Key findings

• While survey results show an overall positive outlook on the usefulness of CheLSEA, end-users identified challenges with the interface design, as well as major themes that could help facilitate clinical integration.

• Open communication, education, and the surgeon’s trust in the system were factors that influenced patients’ trust in the AI-CDSS.

What is known and what is new?

• There is scant literature on the perceptions and considerations that underlie the clinical adoption of AI-based technologies by healthcare professionals and their acceptance by patients. Engaging end users in the development of clinical decision support systems can increase clinical adoption.

• This study addresses usability challenges and incorporates patient views into AI-CDSS implementation.

What is the implication, and what should change now?

• Improved interface design and ongoing evaluation are crucial for AI-CDSS adoption and integration into the clinical workflow. Evaluating patients’ perceptions is necessary to build trust in AI-supported recommendations and to alleviate concerns about the role of AI in their care.

• Researchers developing AI-CDSS with an interface can benefit from the challenges and mitigation strategies identified in this study.

Introduction

Post-operative pulmonary complications, such as pleural effusion or prolonged air leak (PAL), together with extended hospital stay and increased morbidity, are among the many challenges relevant to chest tube management (1,2). Chest tube insertion is a routine procedure used to drain air and fluid from the pleural space after lung surgery (3). Despite various studies striving towards improved chest tube management (4), the clinical decision-making surrounding the optimal duration of chest tube drainage continues to be challenging for healthcare teams, even when digital pleural drainage monitoring is used (5). The heterogeneity of post-operative complications after lung resection, chest tube-associated pain, and controversies in management guidelines complicate decision-making (6-8). Optimizing the duration of chest tube drainage is therefore essential to avoid both the complications of premature chest tube removal (e.g., pneumothorax, fluid re-accumulation, chest tube re-insertion) and unnecessarily prolonged drainage, which leads to patient suffering and extended hospital stay (4).

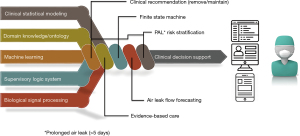

Current research has documented discrepancies in clinical decision-making related to chest tube management, often influenced by training level and by preferences based on years of experience (7,9). Clinical decision support systems (CDSS) use patient-specific data and algorithms to produce refined clinical decisions for healthcare teams at the point of care (10,11). The volume of monitoring data generated as part of routine surgical care has long exceeded what can be analyzed manually. Artificial intelligence (AI) is urgently needed for its potential to analyze big data, aid in decision-making, and learn from its own mistakes (12,13). However, there are many challenges to the clinical implementation of AI, such as the opacity of its algorithms’ inner workings to physicians and patients. In addition, the accuracy and generalisability of AI-based decisions depend on the quality and representativeness of the data set used in the training process (14). It is also essential to consider the need for ongoing surveillance to monitor the algorithm and for governance to ensure process transparency (15). Intelligent decision support systems can help healthcare teams manage patients with chest tube drainage and hold the promise of improved post-operative outcomes. This study is part of an ongoing research program to enhance the quality of care for patients who require chest tube drainage, as illustrated in Figure 1. The research team developed and evaluated an artificial intelligence-based clinical decision support system (AI-CDSS), the Chest Tube Learning Synthesis and Evaluation Assistant (CheLSEA), which can generate predictions and recommendations as to when it would be considered safe to remove chest tube(s) after lung resection (16). The system utilized a forecasting model that could improve the timeliness of chest tube removal by up to 24 hours (17). The team also developed a user interface for healthcare workers to interact with CheLSEA in a clinical setting (Figure 2).

There is scant literature examining the perceptions that underlie AI-based technologies or identifying the most important considerations for their clinical adoption by healthcare professionals and their acceptance by patients. Surveys of patients’ perspectives on AI have highlighted that viewpoints may vary depending on the function of AI in different clinical settings and can evolve based on the information communicated to patients about the AI system (18). Prior to the implementation of an AI-CDSS, it is imperative to identify the barriers to AI technology implementation perceived by healthcare workers and patients (19).

In this study, we first aimed to gain feedback from the surgical team on CheLSEA’s user-interface as part of our AI-CDSS continuous quality improvement process. Secondly, we explored the general knowledge and perceptions of patients regarding AI-CDSS and their use in postoperative care.

We hypothesized that healthcare team members would understand the various elements of the user interface and agree with their relevance and usefulness. We also postulated that patients would be open-minded to AI contributing to their care whilst having a limited understanding of its potential role. We present this article in accordance with the SURGE (The Survey Reporting Guideline) reporting checklist (available at https://ccts.amegroups.com/article/view/10.21037/ccts-22-11/rc).

Methods

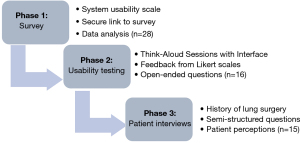

This mixed-methods study collected qualitative and quantitative data through surveys, system usability testing, and semi-structured interviews (Figure 3). This approach was selected to provide a comprehensive understanding of potential issues and barriers that may interfere with the implementation of CheLSEA (20,21).

This project was carried out in three stages: (I) a feedback survey of healthcare professionals who are potential CheLSEA system users (Appendix 1); (II) an interactive usability session with potential users, conducted by direct observation using a think-aloud technique, followed by interviews using closed-ended (five-point Likert scale and multiple-choice) and open-ended questions organized in a structured worksheet (Appendix 2); and (III) semi-structured interviews with patients to learn about their attitudes toward the use of AI-CDSS in chest tube care (Appendix 3). Collected data were analyzed after each stage, and the results informed further evaluation and improvement of the CheLSEA system and its user interface. Purposive sampling was used to select participants; this technique allowed the exploration of feedback from potential end-users and recipients on CheLSEA’s clinical implementation (22). The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the Ottawa Health Science Network-Research Ethics Board (OHSN-REB), reference #20180555-01H, and informed consent was obtained from all individual participants.

Development of CheLSEA & user interface

The CheLSEA

The computer engineering principles and methodology underpinning the development of an operational system and its preliminary performance evaluation have been described in detail elsewhere (23). CheLSEA is an AI-CDSS prototype that combines statistical modeling, machine learning algorithms, clinical evidence, and expert knowledge (Figure 1). CheLSEA integrates various post-operative follow-up measurements to provide chest tube removal recommendations for several future 12-hour time frames (16). Data points include demographic data, physiologic data (air leak, fluid output), radiologic data (chest X-ray grading of pneumothorax, pleural effusion, and subcutaneous emphysema), and clinical status based on a physical examination by a team member.
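The interface displays air leak data both as a flow (mL/min) and as a total volume of air drained per 12 h. As an illustration only, the Python sketch below shows one way time-stamped flow readings could be aggregated into fixed 12-hour windows. The function name, the hourly sampling, and the step-wise integration are assumptions made for this example; they do not describe CheLSEA’s actual data pipeline.

```python
from datetime import datetime, timedelta


def air_volume_per_window(readings, start, window_hours=12):
    """Hypothetical helper: total air drained (mL) per fixed time window.

    readings: (timestamp, flow in mL/min) tuples sorted by time. Each flow
    value is assumed to hold until the next reading (step-wise integration).
    An interval that straddles a window boundary is credited entirely to the
    window in which it starts (a simplification).
    """
    window = timedelta(hours=window_hours)
    totals = {}
    for (t0, flow), (t1, _) in zip(readings, readings[1:]):
        minutes = (t1 - t0).total_seconds() / 60.0
        index = (t0 - start) // window  # 0 = first window, 1 = second, ...
        totals[index] = totals.get(index, 0.0) + flow * minutes
    return totals


# Illustrative data only: hourly readings, 40 mL/min for 12 h, then 10 mL/min.
start = datetime(2021, 1, 1, 8, 0)
readings = [(start + timedelta(hours=h), 40.0 if h < 12 else 10.0) for h in range(25)]
print(air_volume_per_window(readings, start))  # {0: 28800.0, 1: 7200.0}
```

Integrating the flow over time, rather than averaging it, is what turns a rate into a drained volume; a finer sampling interval would simply add more, shorter terms to each window’s sum.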

Validation of the predictions prior to invoking the built-in safety protocol shows that our model can offer robust performance (low variation), accurate classifications [sensitivity of 80%, specificity above 90%, area under the curve (AUC) above 95%], and safe recommendations (<10% false chest tube removals) (23).
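The performance figures above are standard classification metrics. The sketch below shows how sensitivity, specificity, and a rank-based AUC are computed when “safe to remove” is treated as the positive class. The counts and scores are invented for illustration, and reading “false chest tube removals” as the share of removal recommendations that were wrong, FP / (TP + FP), is an assumption, since the denominator is not defined here.

```python
def classification_metrics(tp, fp, tn, fn):
    """Metrics with 'safe to remove' as the positive class."""
    return {
        "sensitivity": tp / (tp + fn),    # safe cases correctly flagged for removal
        "specificity": tn / (tn + fp),    # not-ready cases correctly kept
        "false_removal": fp / (tp + fp),  # assumed definition: wrong removal calls
    }


def auc(pos_scores, neg_scores):
    """Rank-based AUC: probability that a positive case outscores a negative one.

    Ties count as half a win (equivalent to the Mann-Whitney U statistic).
    """
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical counts and scores, not study data.
print(classification_metrics(tp=80, fp=8, tn=192, fn=20))
print(auc([0.9, 0.8], [0.1, 0.85]))  # 0.75
```

The pairwise AUC is quadratic in the number of cases, which is fine for a validation set of this size; a sort-based rank computation gives the same value more efficiently.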

At our institution, the current standard of chest tube care depends on the patient’s clinical status and the physical exam for subcutaneous emphysema, and follows a well-defined protocol. The protocol is defined by an air leak target of 30 mL/min over 8 hours, pleural fluid output volume (less than five times the body weight in kilograms over a 24-hour period), the status of subcutaneous emphysema, and chest X-ray results, including pneumothorax or pleural effusion (4,24). Safeguards against potentially unsafe recommendations from the system are provided by a fail-safe component consisting of a rule-based finite-state machine (FSM). The FSM can override CheLSEA classifications that are deemed non-compliant with the clinical protocol.
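The protocol criteria and the fail-safe override can be sketched as a rule check that vetoes unsafe removal recommendations. This is a simplified illustration, not CheLSEA’s FSM: the states and transitions of the actual machine are not described here, so the sketch reduces the fail-safe to a single compliance test. The field names are hypothetical; the fluid limit is assumed to be in mL (5 mL per kg over 24 h); the air leak criterion is assumed to mean every reading over the last 8 hours is below 30 mL/min; and only removal recommendations are assumed to be overridden.

```python
from dataclasses import dataclass

AIR_LEAK_LIMIT_ML_MIN = 30.0  # protocol air leak target, mL/min
FLUID_ML_PER_KG_24H = 5.0     # assumption: fluid limit read as mL per kg per 24 h


@dataclass
class Assessment:
    """Hypothetical snapshot of the protocol inputs for one patient."""
    air_leak_last_8h: list[float]  # air leak readings over the past 8 h, mL/min
    fluid_24h_ml: float            # pleural fluid output over the past 24 h, mL
    weight_kg: float
    emphysema_progressing: bool = False  # physical exam finding
    cxr_pneumothorax: bool = False       # significant pneumothorax on chest X-ray
    cxr_effusion: bool = False           # significant effusion on chest X-ray


def protocol_compliant(a: Assessment) -> bool:
    """True only if every (assumed) protocol criterion is met."""
    air_ok = bool(a.air_leak_last_8h) and max(a.air_leak_last_8h) < AIR_LEAK_LIMIT_ML_MIN
    fluid_ok = a.fluid_24h_ml < FLUID_ML_PER_KG_24H * a.weight_kg
    exam_ok = not (a.emphysema_progressing or a.cxr_pneumothorax or a.cxr_effusion)
    return air_ok and fluid_ok and exam_ok


def final_recommendation(model_says_remove: bool, a: Assessment) -> str:
    """Fail-safe: veto a removal recommendation that breaks the protocol."""
    if model_says_remove and not protocol_compliant(a):
        return "keep (override)"
    return "remove" if model_says_remove else "keep"


ready = Assessment([25.0, 18.0, 12.0], fluid_24h_ml=200.0, weight_kg=70.0)
leaking = Assessment([60.0, 45.0, 28.0], fluid_24h_ml=200.0, weight_kg=70.0)
print(final_recommendation(True, ready))    # remove
print(final_recommendation(True, leaking))  # keep (override)
```

Keeping the rules outside the learned model means the safety check stays auditable and can be updated when the protocol changes, without retraining.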

CheLSEA’s user-interface

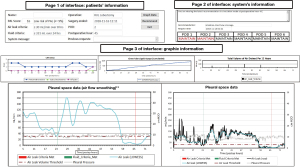

To implement CheLSEA in a clinical setting, the research team developed a user interface (Figure 2) that provides an interactive digital tool for healthcare teams to monitor data collected from the chest tube drainage systems and to access the AI-CDSS recommendations in real time. The interface was developed by the research team, including a practicing thoracic surgeon, a computer scientist, and research associates. It consists of three main sections, each containing pertinent data: (I) patient’s information, including information about the patient, the lung surgery performed, and threshold cut-offs for the adopted clinical criteria; (II) system’s information, displaying CheLSEA’s chest tube removal recommendations; and (III) graphic information, displaying graphically the physiological and radiological data extracted from the chest tube drainage system. The main page is designed to give users access to all components of the interface.

Phase 1: survey of CheLSEA potential users

A survey was conducted among potential end-users to gather feedback on the acceptance and perceptions of a new AI-based CDSS such as CheLSEA. The survey questions were adapted from the System Usability Scale (25) to obtain information on the initial usability level of CheLSEA. The survey collected input on the necessity and utility of the CheLSEA system, its perceived influence on workload (i.e., adding extra daily tasks) and workflow (i.e., fitting within the provider’s work routine), its impact on patient care, and its potential in clinical practice. Respondents answered multiple-choice questions and entered comments in open-text fields. A demographic section asked participants about their healthcare professional role. The survey was pretested with professionals from our department for content validity and ease of completion. It was distributed electronically via a secure link to potential system users within the Thoracic Surgery department at The Ottawa Hospital. Eligible recipients were team members caring for patients with chest tubes, including staff surgeons, resident surgeons, nurses, and research associates.

Phase 2: usability sessions and interviews of potential users

Inclusion criteria required that participants have no prior exposure to the design of CheLSEA or its interface. An invitation to participate was advertised in our surgical unit through physical posters and emails (sent once a week for 4 weeks). The nurse manager coordinated session dates and times to avoid conflicts with participants’ workflow. Interviews were planned to continue until saturation of newly identified information was reached (26). All sessions were conducted one-on-one with a moderator, using a standardized script and structured worksheet. To familiarize each participant with the process, the moderator provided written and oral instructions before each think-aloud session. The think-aloud usability approach asks participants to verbalize their thought process while exploring each interface component and performing specified tasks navigating the interface (27). Once the session began, the moderator continuously reminded participants to keep talking and was allowed to interact with them, as per published recommendations (27). In addition, while observing participants using the interface, the moderator documented hesitancy in navigating any interface section and then asked the participant to clarify potential causes for the hesitancy. Each session was audio-recorded and began with the think-aloud component, followed by a semi-structured discussion using open-ended questions, analyzed thematically, and closed-ended questions, using five-point Likert scales (1 strongly agree to 5 strongly disagree) and multiple-choice questions. Using the structured worksheet, participants were asked questions to collect feedback on CheLSEA’s interface and the impact of its elements on patient care. Open-ended questions were then used to prompt participants to share their general attitudes, the usefulness of various interface components, barriers to using the interface, and recommendations for improvement.

Phase 3: patient interviews

Patients who had undergone surgery for lung cancer in our department in the previous 2 years were retrospectively identified for recruitment. Individuals were eligible for this interview phase if they had undergone lung surgery with post-operative chest tube management. Exclusion criteria were age younger than 18 years, inability to speak and read either English or French, lack of interest in AI research, and chest tube management for reasons other than post-operative care.

Interviews were conducted one-on-one by telephone or videoconference due to restrictions imposed by the COVID-19 pandemic. All interviews were conducted in Canada by one researcher (AM), who was trained by an expert in qualitative research (S.G.N.). Interview guides were developed, revised over multiple rounds, and tested with research team members. The final guide (Appendix 3) consisted of three main sections: (I) patients’ experience with their post-operative chest tube management; (II) their perceptions of issues relevant to CheLSEA as an AI-CDSS; and (III) their opinions on the use of CheLSEA in the care of patients with chest tubes. Each interview was intended to be a single, hour-long session and began with a brief foundational education on AI and clinical decision support systems, followed by an explanation of the purpose and objectives of the study. All participants provided informed consent and were not compensated for participating in the study. Interviews were audio-recorded with consent and transcribed verbatim using NVivo software (version 12). A team member (AM) reviewed the transcripts for accuracy.

Data analysis

Quantitative analysis

Questions had responses on multiple-choice or five-point Likert scales (ranging from 1 for “strongly disagree” to 5 for “strongly agree”). Across the three phases of the study, these responses were used to summarize participants’ demographic characteristics, initial user perceptions of AI-CDSS, the number of hesitations in completing a CheLSEA interface task, and the ability to interpret components of the CheLSEA interface. Data were analyzed using simple descriptive statistics (percentages and frequencies) in SPSS Statistics (version 23, IBM Corp., Armonk, NY).
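The descriptive summaries were produced in SPSS. For readers reproducing them in another environment, a minimal Python equivalent of a frequency table with rounded percentages is sketched below, using the usefulness counts reported in Table 1 (2 not helpful, 8 neutral, 18 helpful). The function name is hypothetical. Because each percentage is rounded independently, a column of rounded percentages need not sum exactly to 100.

```python
from collections import Counter


def frequency_table(responses, categories):
    """Return (category, count, percent rounded to a whole number) per category."""
    counts = Counter(responses)  # missing categories count as 0
    total = len(responses)
    return [(c, counts[c], round(100 * counts[c] / total)) for c in categories]


# Usefulness responses as reported in Table 1 (n = 28).
usefulness = ["Not helpful"] * 2 + ["Neutral"] * 8 + ["Helpful"] * 18
for label, n, pct in frequency_table(usefulness, ["Not helpful", "Neutral", "Helpful"]):
    print(f"{label}: {n} [{pct}]")
# Prints:
# Not helpful: 2 [7]
# Neutral: 8 [29]
# Helpful: 18 [64]
```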

Qualitative analysis

The study sought to identify a range of user and patient perspectives rather than to develop an underlying theory. We therefore analyzed the free-text answers (phase 1), the transcripts of users’ responses to open-ended interview questions (phase 2), and the patient interview transcripts (phase 3) using thematic analysis in a stepwise manner, as described by Braun and Clarke (28). The flexibility and descriptive nature of this method made it better suited to these aims than alternative approaches. During coding, two coders (AM and SG) independently reviewed and analyzed the transcripts to elicit common topics from the interviews. Initial codes were generated by reading the transcripts line by line and linking them with meaningful text segments; these were then grouped into categories based on patterns in the data to form subthemes. Related subthemes were grouped to form broader themes. Theme development was an iterative process that repeatedly returned to the raw data for verification. Both reviewers met to compare and assess agreement on themes and subthemes. All transcripts were thoroughly discussed and the coding revised until disagreements were resolved and consensus was achieved. Final analyses were presented to the team for any final revisions.

Results

Survey feedback (phase 1)

A total of 28 participants responded to the survey (Appendix 1), including 25 nurses, 2 thoracic surgeons, and 1 research associate. Over half of respondents (15/28; 54%) were unsure about the need for such an AI-CDSS (responded “maybe”), while most participants (17/28; 61%) believed that such a decision support system had the potential to improve care, and over half (16/28; 57%) expected it to have a positive impact on chest tube care. The usefulness of the AI-CDSS was also well perceived: most participants (18/28; 64%) stated that it would be helpful in their practice (Table 1). Regarding clinical workflow, most respondents (17/28; 61%) indicated that the system would have a medium impact. Furthermore, 39% (11/28) responded that the system would have a high impact on their daily workload (Table 1).

Table 1

| Questions | N [%] |
|---|---|
| How would you rate the usefulness of an AI-CDSS as described? | |
| Not helpful | 2 [7] |
| Neutral | 8 [29] |
| Helpful | 18 [64] |
| How would using the AI-CDSS impact your daily workload? | |
| Low impact | 9 [32] |
| Medium impact | 8 [29] |
| High impact | 11 [39] |
| How do you think the AI-CDSS will impact chest tube care? | |
| Negative impact | 7 [25] |
| Neutral | 5 [18] |
| Positive impact | 16 [57] |
| How would using the AI-CDSS impact your daily workflow? | |
| Low impact | 8 [29] |
| Medium impact | 17 [61] |
| High impact | 3 [11] |
| Do you think there is a need for an AI-CDSS to assist in the management of patients requiring chest tube drainage? | |
| No | 5 [17] |
| Maybe | 15 [54] |
| Yes | 8 [29] |
| Do you think that this research and development has the potential to improve the care of patients requiring chest drainage? | |
| No | 3 [11] |
| Not sure | 8 [29] |
| Yes | 17 [61] |

AI-CDSS, Artificial Intelligence based Clinical Decision Support System.

Several participants (7/28; 25%) misunderstood the nature of CheLSEA and were sceptical about its ability to improve the care of patients with chest tubes. Concerns were raised that such a system could potentially replace the healthcare provider’s best clinical judgment. This was captured by the following quotes: “Automating patient care will negatively impact patients by removing accountability from the healthcare workers delivering care”, and “Medical staff should be relying on their physical assessment and evaluation and make an educated decision based on their knowledge”.

System usability evaluation findings (phase 2)

A total of 16 participants (11 nurses, 3 resident surgeons, and 2 research team members) took part in one-on-one think-aloud usability testing of the CheLSEA interface (Appendix 2). The frequency of hesitations in navigating various CheLSEA interface components is presented in Table 2. In summary, the PAL score, request time, graph data button, pleural space data, pleural space (smoothing), and chest X-ray graph all revealed usability issues. Half of the participants (8/16; 50%) found the PAL score data difficult to interpret and were unsure about its utility and relevance to clinical care; uncertainty regarding the meaning and function of the PAL score was a common theme. All users were able to identify the ‘Graph Data’ button; however, seven participants (7/16; 44%) were hesitant and requested help before clicking it. Most users had positive impressions of the patient’s and system’s interface elements, describing them as straightforward, clear, and effective. This was reflected in their responses: 81% (13/16) of participants strongly agreed or agreed that the patient’s and system’s interface elements were easy to read (strongly agree: 4/16, 25%; agree: 9/16, 56%), and 69% (11/16) strongly agreed or agreed that the information presented on the first two pages of the interface was easy to understand (strongly agree: 3/16, 19%; agree: 8/16, 50%). Only two participants expressed negative impressions of these two interface elements. After navigating through the first two pages of the interface, users were unsure how to proceed, as it was not clear to them that clicking the ‘Graph Data’ button would give them access to more information.
Concerns were also raised about the definitions of some terms on the graphs, such as the distinction between air leak flow curves representing raw and mathematically smoothed air leak flow data, and about the colors used for the various graphs.

Table 2

| CheLSEA interface components | Hesitancy on task, n [%] | Think-aloud users comments |
|---|---|---|
| Patient’s information | | |
| PAL score | 8/16 [50] | Uncertainty for the PAL score meaning |
| Air leak criteria | 3/16 [19] | Standardize the duration display format |
| Fluid criteria | 3/16 [19] | Change duration display to over 12 h |
| System’s information | | |
| Recommendation | 1/16 [6] | Participant curious about the process to reach recommendation |
| Request time | 4/16 [25] | Clarify time: add AM/PM option |
| Recommendation expires | 3/16 [19] | Clarify time: add AM/PM option |
| POD table | 3/16 [19] | Participants expressed difficulty understanding the table |
| Graph data button | 7/16 [44] | Participants were hesitant & requested help before clicking the graph button |
| Graph’s information | | |
| Pleural space data | 7/16 [44] | Difference between air leak (LOWESS) vs. air leak (Raw). Changing choice of colors |
| Pleural space (smoothing) | 5/16 [31] | Meaning of air leak (LOWESS). Comment on changing choice of colors |
| CXR graph | 4/16 [25] | How are the events graded. Uncertainty about the subcutaneous emphysema line overlap |
| Chest tube liquid output | 1/16 [6] | N/A |
| Total volume of air drained per 12 h | 1/16 [6] | X-axis values not clear |

CheLSEA, Chest tube Learning Synthesis and Evaluation Assistant; PAL, prolonged air leak; POD, post-operative day; LOWESS, locally weighted scatterplot smoothing; CXR, chest X-ray.
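Several participants were unsure what distinguished the raw air leak curve from the LOWESS curve. LOWESS replaces each point with the value, at that point, of a weighted straight-line fit to its nearest neighbours, using tricube weights that fall to zero at the edge of the neighbourhood. The Python sketch below is a simplified version that omits the robustness iterations of the full method, and the neighbourhood fraction `frac` is an assumed value rather than the setting used in CheLSEA’s interface; `statsmodels.nonparametric.smoothers_lowess.lowess` provides a complete implementation.

```python
def lowess_simple(x, y, frac=0.3):
    """Locally weighted linear smoothing (LOWESS without robustness iterations)."""
    n = len(x)
    k = min(n, max(2, round(frac * n)))  # number of neighbours in each local fit
    fitted = []
    for xi in x:
        # Bandwidth: distance to the k-th nearest point (guard against zero).
        h = sorted(abs(xj - xi) for xj in x)[k - 1] or 1e-12
        # Tricube weights: 1 at xi, falling to 0 at distance h and beyond.
        w = [max(0.0, 1 - (abs(xj - xi) / h) ** 3) ** 3 for xj in x]
        sw = sum(w)
        mx = sum(wj * xj for wj, xj in zip(w, x)) / sw
        my = sum(wj * yj for wj, yj in zip(w, y)) / sw
        sxx = sum(wj * (xj - mx) ** 2 for wj, xj in zip(w, x))
        sxy = sum(wj * (xj - mx) * (yj - my) for wj, xj, yj in zip(w, x, y))
        slope = sxy / sxx if sxx > 0 else 0.0
        fitted.append(my + slope * (xi - mx))  # weighted least-squares line at xi
    return fitted


# Illustrative only: a noisy air leak signal alternating around 10 mL/min.
hours = list(range(20))
raw = [10 + (1 if h % 2 else -1) for h in hours]
smooth = lowess_simple(hours, raw)
print(max(abs(v - 10) for v in smooth) < 1)  # True: the smoothed curve stays near the trend
```

A larger `frac` gives a smoother curve that reacts more slowly to genuine changes in air leak, which is one reason a display might show the raw and smoothed curves side by side.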

Challenges with visibility, understandability, and hesitancy in navigation impeded participants’ operation of the interface. Time spent navigating and interpreting different components of the interface increased participants’ workload, and unfamiliarity with the terminology used was an added burden that disrupted workflow. Participants thought that simplifying the graphs and defining ambiguous terms would improve workflow and facilitate the integration of CheLSEA into their daily work. They also requested an option to view the data as numerical values in addition to the graph display.

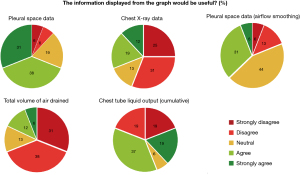

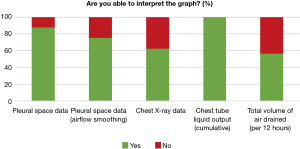

Usability was defined as the ability to interpret the information provided to support the user’s clinical decision-making. When rating the graphs’ usability, more participants chose “strongly disagree” or “disagree” than “strongly agree” or “agree” for both the total volume of air drained and the chest X-ray graphs (11/16, 69% and 9/16, 56%, respectively) (Figure 4). Participants reported that they were not able to interpret the “total volume of air drained” graph (7/16; 44%) and the chest X-ray graph (6/16; 37.5%) (Figure 5).

Thematic analysis of the current version of CheLSEA’s user interface revealed six major themes: visibility, understand-ability, usability, navigation, workflow, and usefulness. The themes are described with summarized user comments and recommendations in Table 3. For visibility, the complexity of graphs displaying multiple curves at once and the colors used to present the curves were troublesome. Participants were confused by the overlap between curves and missed important threshold lines because of the light colors used. Improving the design by separating the curves and optimizing the axis format and colors is needed to enhance visibility. For understand-ability, nine participants did not understand the meaning of the clinically important terms “PAL score” and “Air leak LOWESS” used throughout the interface, and participants stated that adding definitions to the labels and acronyms used would help them fully comprehend the information and graphs presented.

Table 3

| Themes | Description | User comments | Recommendations |
|---|---|---|---|
| Visibility | The display of the system’s text, lines, or graphs; standards of universal product design must be followed across all components | • Overlap between multiple curves on the same graph creates confusion | • Optimize the major and minor gridlines of the x and y axes |
| | | • Difficulty reading graphs because of the colors used to label the curves and the graphs’ axis format | • Change the colors used to label the graphs to improve visibility |
| | | | • Separate curves within each graph to avoid overlap |
| Understand-ability | The information displayed by the system is easy to comprehend. Refers to the text, labels, and instructions of the interface components | • Lack of clarity regarding the definition of some acronyms or graph labels used | • Add definitions and content descriptions to terminologies used |
| | | | • Define labels and scales to help interpret the graphs |
| Usability | The user’s perception of the extent to which CheLSEA improves their clinical decision-making and care of patients | • Uncertainty about generalizability to all patients and subgroups | • Offer educational sessions for healthcare providers to fully understand the different components of the system and how decisions are made, and to build credibility for the system |
| | | • Concern that the system will replace the role of physical and clinical judgement | |
| | | • System could negatively impact accountability for decisions | |
| Navigation | The ease with which users navigate the interface platform | • Confusion about where to press to proceed to the next section of the interface | • The system should include instructions on when and why to navigate to certain components of the system |
| Workflow | The interface fits within the regular workflow of the care provider | • Interpretation of graphs adds time and increases workload | • Simplify the graph presentation to improve workflow |
| | | • Unfamiliarity with the terminologies used impedes understanding of information and disrupts workflow | • Add definitions of terminologies used as a pop-up window |
| Usefulness | The user can use the interface with ease, and the information provided is useful for care | • More information is provided than is needed | • Redesign the interface to allow selective access to the extra information available |
| | | • Absence of numerical data presentation; data only provided as graphs | • Add the raw data of parameters in an organized way |
| | | | • Omit redundant information |

CheLSEA, Chest tube Learning Synthesis and Evaluation Assistant.

Participants perceived the role of CheLSEA in improving patient care differently. They frequently mentioned CheLSEA’s potential to aid the complex decision-making process surrounding chest tube care. However, some participants were concerned about the specificity of the system and whether healthcare providers would efficiently integrate their assessment skills with this support system. To mitigate these concerns, participants requested educational sessions to understand the principles by which the recommendations are made and to learn to use the various interface elements with ease.

Patient perspectives (phase 3)

Patients who had undergone lung resection surgery between April 2020 and March 2022 (lobectomy: 9/15, 60%; wedge resection: 6/15, 40%) shared their perspectives. Participants were recruited until March 2022 and took part in a single one-on-one interview, by telephone or videoconference, lasting between 27 and 48 min. A total of 15 patients participated, aged 50–71 years, of whom 7/15 (47%) were female and 8/15 (53%) were male, with education levels ranging from high school to postgraduate. Familiarity with AI ranged from somewhat familiar (5/15; 33%) and familiar (7/15; 47%) to intermediate knowledge (2/15; 13%) and advanced knowledge (1/15; 7%) (Table 4).

Table 4

| Characteristics | Value (N=15), n [%] |
|---|---|
| Age (years) | |
| 50–59 | 5 [33] |
| 60–69 | 9 [60] |
| 70+ | 1 [7] |
| Education level | |
| High school | 3 [20] |
| College | 5 [33] |
| Undergraduate | 3 [20] |
| Postgraduate | 4 [27] |
| Familiarity with AI | |
| Somewhat familiar | 5 [33] |
| Familiar | 7 [47] |
| Intermediate knowledge | 2 [13] |
| Advanced knowledge | 1 [7] |
| Diagnosis | |
| Lung cancer | 15 [100] |
| Operation | |
| Lobectomy | 9 [60] |
| Wedge resection | 6 [40] |
| Sex | |
| Female | 7 [47] |
| Male | 8 [53] |

AI, artificial intelligence.

Thematic analysis revealed five major themes: optimistic attitudes towards CheLSEA as an AI-CDSS, implementation considerations, engagement levels and communication between patient and healthcare team, trust in the surgeon, and desirable benefits of AI-CDSS (Table 5). Participants viewed the development of an AI-CDSS as an opportunity to pioneer innovation in clinical decision-making and enrich our healthcare system, and expressed a need for a dependable system that uses data to improve chest tube management decisions. They frequently expressed concerns about decision quality and the potential of such systems to produce errors or incorrect recommendations; these concerns were viewed as barriers to implementing such systems in patient care. Although many participants agreed that there was a need for technology in health care, a common focus was the implications of such systems for human interaction and bedside communication between patients and healthcare team members. Participants were concerned that technology could supersede nurses, or that healthcare workers could come to rely on an automated system to monitor patients’ course in hospital.

Table 5

| Theme | Example quotes from patient interviews |
|---|---|
| Optimistic attitudes to AI-CDSS | • “It is not a perfect experience that I had, it was not perfect science when they decided to remove my tube, AI based systems might offer more precision, I think using data is a wonderful way to assist. It cannot be worse off; it is an added benefit.” |
| | • “The more data that we have, the better-quality decisions, so it takes out guesswork and allows larger amount of data to be analyzed, which, you know, should help to improve the overall decision making.” |
| | • “Advancement and innovative technology such as this AI based system will put our Canadian healthcare system at a forefront.” |
| Implementation considerations | |
| Additional monitoring/clinical vigilance | • “I know there’s glitches in such systems and errors are going to happen. That is why I expect someone to check, make sure that the system is actually doing what it is supposed to.” |
| | • “It would increase my trust if the system could correct its own mistakes or have checkpoints.” |
| | • “There should be data overtime that compares physician’s judgement with the system’s judgement and correlate with complications.” |
| Bedside context | • “It’s all great to have all these A.I. systems to help you, but it does not take the place of you observing your patient, the nurses who will be using it will somehow become way too dependent on it.” |
| | • “I am all for advances in technology, however these systems are just tools and will not replace the moment where the surgeon, nurse or physiotherapist looks at you, sees you, and reassures you. You need both.” |
| | • “From a patient point of view, these algorithms can help in decision making if used properly, but I worry it would compromise the relationship with my healthcare team.” |
| Engagement and communication between patient and healthcare team | • “Patients would be most comfortable if they had a back-and-forth discussion with the healthcare team and were given all the information about how the decision is being made by the machine and by the healthcare team.” |
| | • “I would like to know what will happen beforehand, or why we are using this system, and how safe it is.” |
| | • “It is important for me as a patient to be well informed and know that an AI system is being used to take decisions about my care.” |
| Trust in surgeon | • “My trust in such a system would be influenced by what my surgeons think and how confident they are in the recommendation produced by the system.” |
| | • “If there is a discrepancy, I would not go with the AI, I would rely on the surgeon’s experience.” |
| | • “I think it is AI system and the doctor should come to agreement, but the final say goes to my doctor.” |
| Desirable benefits of AI-CDSS | • “The system will personalize the decision to each patient, it will provide a unique decision based on my own variables rather than a generic guideline.” |
| | • “I was operated on during COVID, I was terrified to get an infection during my stay at the hospital, if the AI system can get me home faster, I would be all for it.” |
| | • “In a world in which we have fewer health care professionals working in our health care centers, and of course, it's worse in small towns or remote areas, then yes, there is some benefit to this system.” |

AI, artificial intelligence; CDSS, clinical decision support system.

A common point of discussion in the interviews was being informed about how the AI-CDSS is integrated into the decision-making process and what to expect from its recommendations. Participants noted that being educated about the system, with clear communication from the team, would help them build trust in the system and feel more comfortable with the recommendations it generates.

Many participants discussed their trusting relationship with their surgeon and how it influences their overall confidence in an AI-CDSS recommendation. Participants’ level of trust in AI-CDSS recommendations was influenced by their surgeon’s attitude towards the system. In the event of a discrepancy between the AI-CDSS and the surgeon, all participants stated that they would rely on the surgeon’s decision. Participants perceived that using an AI-CDSS in chest tube management would provide benefits ranging from improved clinical precision to the informed integration of patient data into care. They emphasized the benefit of a system that provides clinical recommendations tailored to their own monitoring data. A system that could improve safety and shorten length of stay was deemed potentially beneficial. Participants also felt that such systems could be useful in centres with limited access to specialty services.

Discussion

Investigators have emphasized the importance of evaluating CDSS from multiple angles (29-31). This mixed-methods study provides insights into potential challenges that healthcare teams may face when implementing decision support systems for surgical patients. Direct involvement of healthcare providers throughout the development phase of CDSS has been shown to enhance their acceptance in clinical practice (32,33). However, the literature on patient perceptions of AI-CDSS is limited. Previous research has surveyed patients and the public to understand their viewpoints on the intersection of AI and healthcare (34-37). These studies were survey-based and reported variable perceptions of AI adoption in health care in general. Our research makes a unique contribution to the field by examining the perceptions of both end-users and patients regarding the role of AI-based systems specifically in postoperative thoracic surgical care.

Barriers to the implementation of decision support interfaces include poor integration with the user’s workflow, failure of the tool to meet user needs, and the lack of usability evaluations (38-42). Such barriers lead to low adoption of these innovative technologies by healthcare teams (43).

Feedback from potential end users identified the importance of clearly defining the role of CDSS to alleviate patient and healthcare personnel concerns, facilitate implementation, and maximize the probability of sustained adoption. Our findings indicate that patients value innovation and are open to technological advances in clinical care. Patients in our cohort commonly expressed optimism about using AI to power decision support systems and about its potential benefits to patient care. However, a considerable proportion of the patients and healthcare workers interviewed had apprehensions about the quality of recommendations and the system’s trustworthiness. There were concerns regarding the validity of the system’s recommendations, its impact on the patient-healthcare team relationship, and navigation of the user interface. The nature of real-life clinical practice necessitates collaboration between AI and its users rather than shifting responsibility to such systems (44,45). Studies have demonstrated enhanced clinical decision-making when clinical experts actively collaborated with AI (46-48).

Previous literature suggests that users of CDSS in other settings similarly identified ease of navigation (49), layout presentation (50), integration with workflow (51), access to supporting information (52), and knowledge of the system through training (53) as desirable qualities for implementation. We hope that engaging potential users and patients in the system’s development will improve the trustworthiness and credibility of our system’s clinical implementation and increase its adoption (54). Other researchers developing AI-CDSS with an interface can benefit from the challenges and mitigation strategies identified in this study. This feedback is vital because the way an AI-CDSS is presented and displayed to its front-line users influences its perceived helpfulness (55,56).

The study findings need to be interpreted in light of important limitations. Firstly, the qualitative nature of the data and the small, single-institution sample limit the generalizability of the results. Secondly, although participants were selected based on characteristics likely to reflect the end users and the target population (i.e., lung resection patients), random selection was not possible. This could have introduced uncontrolled bias in evaluating the quality of the user interface and the perceived utility of the system. To reduce bias, usability sessions and patient interviews were conducted with successive participants until saturation was reached, that is, until no new insights emerged from participants (57). Despite these limitations, this research constitutes a worthwhile contribution to the field of thoracic surgery; to our knowledge, no prior studies have focused on AI-CDSS in chest tube management. We encourage larger multi-centre studies to assess the generalizability and replicability of our findings.

Conclusions

This study highlights the need to engage healthcare providers and patients in validating and evaluating clinical decision support systems, in order to improve their clinical adoption while achieving the highest possible safety and quality. Further clinical assessment is needed to clarify the role and value of intelligent systems in caring for the surgical patient.

Acknowledgments

Funding: This research was financially supported by the Ottawa Hospital Academic Medical Organization (TOHAMO) Innovation Grants (No. TOH 15-001; No. TOH-21-20) from the Ministry of Health and Long-term Care of Ontario.

Footnote

Provenance and Peer Review: This article was commissioned by the Guest Editors (Jean Bussieres and George Rakovich) for the series “Recent Advances in Perioperative Care in Thoracic Surgery and Anesthesia” published in Current Challenges in Thoracic Surgery. The article has undergone external peer review.

Reporting Checklist: The authors have completed the SURGE (The Survey Reporting Guideline) reporting checklist. Available at https://ccts.amegroups.com/article/view/10.21037/ccts-22-11/rc

Data Sharing Statement: Available at https://ccts.amegroups.com/article/view/10.21037/ccts-22-11/dss

Peer Review File: Available at https://ccts.amegroups.com/article/view/10.21037/ccts-22-11/prf

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://ccts.amegroups.com/article/view/10.21037/ccts-22-11/coif). The series “Recent Advances in Perioperative Care in Thoracic Surgery and Anesthesia” was commissioned by the editorial office without any funding or sponsorship. NJ reports grants for projects not related to this paper, royalties from a book on machine learning evaluation from Cambridge University Press, as well as support for attending meetings, or for giving lectures or invited talks from DARPA, American University, Ben Gurion University, IRDTA, and AGH. SG reports funding for project-related tasks from the Ottawa Hospital Academic Medical Organization (TOHAMO) Innovation Grant, funding for the project from the Ministry of Health and Long-term Care of Ontario, and grants for projects not related to this paper. The authors have no other conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the Ottawa Health Science Network-Research Ethics Board (OHSN-REB), reference #20180555-01H, and informed consent was obtained from all individual participants.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Satoh Y. Management of chest drainage tubes after lung surgery. Gen Thorac Cardiovasc Surg 2016;64:305-8.
2. Ziarnik E, Grogan EL. Postlobectomy Early Complications. Thorac Surg Clin 2015;25:355-64.
3. Agostini P, Cieslik H, Rathinam S, et al. Postoperative pulmonary complications following thoracic surgery: are there any modifiable risk factors? Thorax 2010;65:815-8.
4. French DG, Dilena M, LaPlante S, et al. Optimizing postoperative care protocols in thoracic surgery: best evidence and new technology. J Thorac Dis 2016;8:S3-11.
5. Nayak R, Shargall Y. Modern day guidelines for post lobectomy chest tube management. J Thorac Dis 2020;12:143-5.
6. Deng B, Qian K, Zhou JH, et al. Optimization of Chest Tube Management to Expedite Rehabilitation of Lung Cancer Patients After Video-Assisted Thoracic Surgery: A Meta-Analysis and Systematic Review. World J Surg 2017;41:2039-45.
7. Zardo P, Busk H, Kutschka I. Chest tube management: state of the art. Curr Opin Anaesthesiol 2015;28:45-9.
8. Shalli S, Saeed D, Fukamachi K, et al. Chest tube selection in cardiac and thoracic surgery: a survey of chest tube-related complications and their management. J Card Surg 2009;24:503-9.
9. Chang PC, Chen KH, Jhou HJ, et al. Promising Effects of Digital Chest Tube Drainage System for Pulmonary Resection: A Systematic Review and Network Meta-Analysis. J Pers Med 2022;12:512.
10. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005;293:1223-38.
11. Hunt DL, Haynes RB, Hanna SE, et al. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA 1998;280:1339-46.
12. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med 2019;25:44-56.
13. Jiang F, Jiang Y, Zhi H, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol 2017;2:230-43.
14. Etienne H, Hamdi S, Le Roux M, et al. Artificial intelligence in thoracic surgery: past, present, perspective and limits. Eur Respir Rev 2020;29:200010.
15. Magrabi F, Ammenwerth E, McNair JB, et al. Artificial Intelligence in Clinical Decision Support: Challenges for Evaluating AI and Practical Implications. Yearb Med Inform 2019;28:128-34.
16. Klement W, Gilbert S, Maziak DE, et al. Chest Tube Management After Lung Resection Surgery using a Classifier. In: 2019 IEEE International Conference on Data Science and Advanced Analytics (DSAA); 2019:432-41.
17. Yeung C, Ghazel M, French D, et al. Forecasting pulmonary air leak duration following lung surgery using transpleural airflow data from a digital pleural drainage device. J Thorac Dis 2018;10:S3747-54.
18. Khullar D, Casalino LP, Qian Y, et al. Perspectives of Patients About Artificial Intelligence in Health Care. JAMA Netw Open 2022;5:e2210309.
19. Esmaeilzadeh P, Mirzaei T, Dharanikota S. Patients' Perceptions Toward Human-Artificial Intelligence Interaction in Health Care: Experimental Study. J Med Internet Res 2021;23:e25856.
20. Greene JC, Caracelli VJ, Graham WF. Toward a Conceptual Framework for Mixed-Method Evaluation Designs. Educ Eval Policy Anal 1989;11:255-74.
21. Walji MF, Kalenderian E, Piotrowski M, et al. Are three methods better than one? A comparative assessment of usability evaluation methods in an EHR. Int J Med Inform 2014;83:361-7.
22. Palinkas LA, Horwitz SM, Green CA, et al. Purposeful Sampling for Qualitative Data Collection and Analysis in Mixed Method Implementation Research. Adm Policy Ment Health 2015;42:533-44.
23. Klement W, Gilbert S, Resende VF, et al. The validation of chest tube management after lung resection surgery using a random forest classifier. Int J Data Sci Anal 2022;13:251-63.
24. French DG, Plourde M, Henteleff H, et al. Optimal management of postoperative parenchymal air leaks. J Thorac Dis 2018;10:S3789-98.
25. Grier RA, Bangor A, Kortum P. The System Usability Scale: Beyond Standard Usability Testing. Proc Hum Factors Ergon Soc Annu Meet 2013;57:187-91.
26. Patton MQ. Qualitative Research & Evaluation Methods. Thousand Oaks, CA: SAGE; 2002: 692.
27. Lundgrén-Laine H, Salanterä S. Think-aloud technique and protocol analysis in clinical decision-making research. Qual Health Res 2010;20:565-75.
28. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol 2006;3:77-101.
29. Gagnon MP, Desmartis M, Labrecque M, et al. Systematic review of factors influencing the adoption of information and communication technologies by healthcare professionals. J Med Syst 2012;36:241-77.
30. Moja L, Liberati EG, Galuppo L, et al. Barriers and facilitators to the uptake of computerized clinical decision support systems in specialty hospitals: protocol for a qualitative cross-sectional study. Implement Sci 2014;9:105.
31. Kilsdonk E, Peute LW, Jaspers MW. Factors influencing implementation success of guideline-based clinical decision support systems: A systematic review and gaps analysis. Int J Med Inform 2017;98:56-64.
32. Ford E, Edelman N, Somers L, et al. Barriers and facilitators to the adoption of electronic clinical decision support systems: a qualitative interview study with UK general practitioners. BMC Med Inform Decis Mak 2021;21:193.
33. Liberati EG, Ruggiero F, Galuppo L, et al. What hinders the uptake of computerized decision support systems in hospitals? A qualitative study and framework for implementation. Implement Sci 2017;12:113.
34. Aggarwal R, Farag S, Martin G, et al. Patient Perceptions on Data Sharing and Applying Artificial Intelligence to Health Care Data: Cross-sectional Survey. J Med Internet Res 2021;23:e26162.
35. York T, Jenney H, Jones G. Clinician and computer: a study on patient perceptions of artificial intelligence in skeletal radiography. BMJ Health Care Inform 2020;27:e100233.
36. Stai B, Heller N, McSweeney S, et al. PD23-03 Public perceptions of AI in medicine. J Urol 2020;203:e464.
37. Palmisciano P, Jamjoom AAB, Taylor D, et al. Attitudes of Patients and Their Relatives Toward Artificial Intelligence in Neurosurgery. World Neurosurg 2020;138:e627-33.
38. Watson J, Hutyra CA, Clancy SM, et al. Overcoming barriers to the adoption and implementation of predictive modeling and machine learning in clinical care: what can we learn from US academic medical centers? JAMIA Open 2020;3:167-72.
39. Ross J, Stevenson F, Lau R, et al. Factors that influence the implementation of e-health: a systematic review of systematic reviews (an update). Implement Sci 2016;11:146.
40. Plumb M, Kautz K. Barriers to the integration of information technology within early childhood education and care organisations: A review of the literature. 2015;17.
41. Saleem JJ, Patterson ES, Militello L, et al. Exploring barriers and facilitators to the use of computerized clinical reminders. J Am Med Inform Assoc 2005;12:438-47.
42. Patterson ES, Doebbeling BN, Fung CH, et al. Identifying barriers to the effective use of clinical reminders: bootstrapping multiple methods. J Biomed Inform 2005;38:189-99.
43. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med 2012;157:29-43.
44. Patel BN, Rosenberg L, Willcox G, et al. Human-machine partnership with artificial intelligence for chest radiograph diagnosis. NPJ Digit Med 2019;2:111.
45. Chen PC, Gadepalli K, MacDonald R, et al. An augmented reality microscope with real-time artificial intelligence integration for cancer diagnosis. Nat Med 2019;25:1453-7.
46. Park A, Chute C, Rajpurkar P, et al. Deep Learning-Assisted Diagnosis of Cerebral Aneurysms Using the HeadXNet Model. JAMA Netw Open 2019;2:e195600.
47. Jain A, Way D, Gupta V, et al. Development and Assessment of an Artificial Intelligence-Based Tool for Skin Condition Diagnosis by Primary Care Physicians and Nurse Practitioners in Teledermatology Practices. JAMA Netw Open 2021;4:e217249.
48. Seah JCY, Tang CHM, Buchlak QD, et al. Effect of a comprehensive deep-learning model on the accuracy of chest x-ray interpretation by radiologists: a retrospective, multireader multicase study. Lancet Digit Health 2021;3:e496-506.
49. Zheng H, Rosal MC, Li W, et al. A Web-Based Treatment Decision Support Tool for Patients With Advanced Knee Arthritis: Evaluation of User Interface and Content Design. JMIR Hum Factors 2018;5:e17.
50. Shah NR, Seger AC, Seger DL, et al. Improving acceptance of computerized prescribing alerts in ambulatory care. J Am Med Inform Assoc 2006;13:5-11.
51. Gosling AS, Westbrook JI, Coiera EW. Variation in the use of online clinical evidence: a qualitative analysis. Int J Med Inform 2003;69:1-16.
52. Kuperman GJ, Bobb A, Payne TH, et al. Medication-related clinical decision support in computerized provider order entry systems: a review. J Am Med Inform Assoc 2007;14:29-40.
53. Bastholm Rahmner P, Andersén-Karlsson E, Arnhjort T, et al. Physicians' perceptions of possibilities and obstacles prior to implementing a computerised drug prescribing support system. Int J Health Care Qual Assur Inc Leadersh Health Serv 2004;17:173-9.
54. Yang R, Wibowo S. User trust in artificial intelligence: A comprehensive conceptual framework. Electron Mark 2022;32:2053-77.
55. Rajpurkar P, O'Connell C, Schechter A, et al. CheXaid: deep learning assistance for physician diagnosis of tuberculosis using chest x-rays in patients with HIV. NPJ Digit Med 2020;3:115.
56. Kim HE, Kim HH, Han BK, et al. Changes in cancer detection and false-positive recall in mammography using artificial intelligence: a retrospective, multireader study. Lancet Digit Health 2020;2:e138-48.
57. Saunders B, Sim J, Kingstone T, et al. Saturation in qualitative research: exploring its conceptualization and operationalization. Qual Quant 2018;52:1893-907.

Cite this article as: El Adou Mekdachi A, Strain J, Nicholls SG, Rathod J, Alayche M, Resende VMF, Klement W, Japkowicz N, Gilbert S. Developing an artificial intelligence-based clinical decision-support system for chest tube management: user evaluations & patient perspectives of the Chest Tube Learning Synthesis and Evaluation Assistant (CheLSEA) system. Curr Chall Thorac Surg 2023;5:35.